Four factors affect system performance:

- Number of Processors

- Width of the data bus

- Cache Memory

- Clock Speed

Number of Processors

A dual-core or quad-core processor has more than one CPU (Central Processing Unit) on a single chip.

A dual-core processor has two CPUs on one chip, whereas a quad-core processor has four.

In theory, the more cores a processor has, the more instructions it can execute at the same time, thereby improving system performance.

However, additional cores mean additional heat generation, so cooling the processor is important, and additional circuitry is required to coordinate processing between the cores.

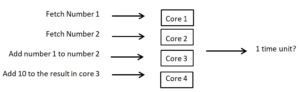

Is a quad-core processor four times as fast as a single-core processor? No. Take the following example:

You may think that a quad-core processor could carry out all four instructions at the same time, one on each core. However, some instructions depend on the results of others:

- Core 3 must wait for cores 1 and 2 to fetch the numbers before it can add them together

- Core 4 must wait for core 3 to finish the addition before it can carry out its instruction

So using a quad-core processor would be faster than using a single-core processor, but a four-fold increase in speed would not happen.
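The waiting described above can be sketched as a small simulation. This is only an illustration under simplifying assumptions: every instruction takes one time step, and the instruction names (including `use_sum` for the fourth instruction, which the example does not name) are hypothetical.

```python
# Each instruction lists the instructions it must wait for.
# The names are illustrative; "use_sum" stands in for the fourth instruction.
DEPENDS_ON = {
    "fetch_a": [],
    "fetch_b": [],
    "add": ["fetch_a", "fetch_b"],
    "use_sum": ["add"],
}

def steps_needed(depends_on, cores):
    """Count time steps, assuming each instruction takes one step on one core."""
    done = set()
    steps = 0
    while len(done) < len(depends_on):
        # An instruction is ready once everything it depends on has finished.
        ready = [name for name, deps in depends_on.items()
                 if name not in done and all(d in done for d in deps)]
        done.update(ready[:cores])  # at most one instruction per core per step
        steps += 1
    return steps

single = steps_needed(DEPENDS_ON, cores=1)
quad = steps_needed(DEPENDS_ON, cores=4)
print(single, quad)  # 4 steps on one core, 3 steps on four cores
```

In this sketch the quad-core processor needs 3 steps instead of 4, so it is faster, but nowhere near four times as fast.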

Is a dual-core processor in which each core runs at 1.5 GHz faster than a 3 GHz single-core processor?

No. For the same reason as before, one of the cores may have to wait for the other core to complete an instruction, and this would reduce system efficiency.
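A rough calculation can illustrate this comparison. The model below is a deliberate simplification and not a description of any real processor: it assumes one instruction per clock cycle, and the workload size and the 25% share of instructions that must wait (`serial_fraction`) are made-up figures.

```python
def run_time(instructions, clock_hz, cores, serial_fraction):
    """Seconds to run the work, assuming one instruction per clock cycle.

    serial_fraction is the share of instructions that must wait for an
    earlier result and so cannot be spread across cores.
    """
    serial = instructions * serial_fraction
    parallel = instructions - serial
    cycles = serial + parallel / cores
    return cycles / clock_hz

WORK = 3_000_000_000  # hypothetical workload of 3 billion instructions

single_3ghz = run_time(WORK, 3e9, cores=1, serial_fraction=0.25)
dual_1_5ghz = run_time(WORK, 1.5e9, cores=2, serial_fraction=0.25)
print(single_3ghz, dual_1_5ghz)  # 1.0 second versus 1.25 seconds
```

Under these assumptions the two processors would only tie if no instruction ever had to wait (`serial_fraction=0`); any waiting makes the dual-core 1.5 GHz processor the slower of the two.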

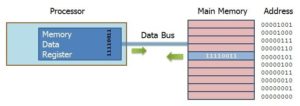

Width of a Data Bus

The width of a data bus is the number of wires in the data bus, each of which can transfer 1 bit of data at a time.

So an 8-bit data bus could transfer 8 bits in a single fetch whereas a 64-bit data bus could transfer 64 bits. Therefore, increasing the width of the data bus should increase system efficiency.

If you think of this in terms of transferring ASCII characters stored as 8 bits each, a 64-bit data bus could transfer 8 characters in the same time it would take an 8-bit data bus to transfer 1 character.

Increasing the width of the data bus increases the quantity of data that can be carried at one time and so increases the performance of the computer system.
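The ASCII comparison above can be worked through in a few lines. This is a minimal sketch that assumes each character occupies 8 bits; the function name `transfers_needed` and the sample message are illustrative.

```python
import math

def transfers_needed(data_bits, bus_width_bits):
    """Number of bus transfers needed to move data_bits of data."""
    return math.ceil(data_bits / bus_width_bits)

message = "COMPUTER"       # 8 characters
bits = len(message) * 8    # assuming 8 bits per ASCII character

print(transfers_needed(bits, 8))   # 8 transfers on an 8-bit bus
print(transfers_needed(bits, 64))  # 1 transfer on a 64-bit bus
```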

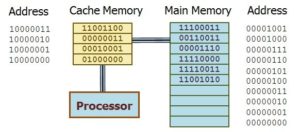

Cache Memory

Cache memory is used to improve system performance. Like RAM, cache memory is volatile: when the system is shut down, its contents are cleared. Cache memory allows faster access to data than RAM for two reasons:

- Cache uses static RAM, whereas main memory (RAM) uses dynamic RAM

- Cache attempts to store commonly used instructions and data

Static RAM does not need to be refreshed in the same way that dynamic RAM does, which helps make it faster to access.

Cache memory can improve system performance because:

- Frequently accessed data and instructions are held in cache

- It is faster to access, as it is on the same chip as the processor

- It reduces the need to access slower main memory

- It is static RAM, which is faster than dynamic RAM
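The effect of keeping frequently accessed items in cache can be sketched with a toy simulation. The access times below are made-up figures chosen for easy arithmetic, and evicting the least recently used item is an assumption of this sketch, not something the notes specify.

```python
from collections import OrderedDict

CACHE_TIME_NS = 1   # hypothetical cost of a cache hit
RAM_TIME_NS = 10    # hypothetical cost of going to main memory

def total_access_time(addresses, cache_size):
    """Total time to read the addresses using a small least-recently-used cache."""
    cache = OrderedDict()
    total = 0
    for address in addresses:
        if address in cache:
            cache.move_to_end(address)     # mark as most recently used
            total += CACHE_TIME_NS
        else:
            total += RAM_TIME_NS           # miss: fetch from main memory
            cache[address] = True
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used item
    return total

# A loop that reads the same four addresses 25 times over.
addresses = [0, 1, 2, 3] * 25

with_cache = total_access_time(addresses, cache_size=4)
without_cache = total_access_time(addresses, cache_size=0)
print(with_cache, without_cache)  # 136 versus 1000
```

Only the first read of each address goes to main memory; the other 96 reads are served from cache, which is why the total drops so sharply in this example.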

Clock Speed

The clock is the electronic unit that synchronises all the activities of the processor by generating pulses at a constant rate. The clock speed is the frequency at which the clock generates these pulses and is used as an indicator of the processor’s speed.

An instruction in the CPU can only start on a pulse of the clock, so the higher the clock speed, the more instructions can be carried out per second, which improves system performance.

Nowadays, clock speeds are measured in gigahertz (GHz), with a typical processor speed being 3 GHz. This means the CPU performs 3 billion cycles a second, and if each instruction took only 1 cycle (which is unrealistic), the CPU could carry out 3 billion instructions per second.

It may seem that speeding up a computer is as simple as increasing the clock speed, but the faster the clock speed, the more heat is generated, and this can overheat and damage the processor.
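The arithmetic above can be checked with a short calculation. The figure of 4 cycles per instruction in the second call is a hypothetical value, included only to show how the rate falls when instructions take more than one cycle.

```python
def instructions_per_second(clock_hz, cycles_per_instruction):
    """Instructions completed each second at a given clock speed."""
    return clock_hz / cycles_per_instruction

CLOCK_HZ = 3_000_000_000  # 3 GHz

print(instructions_per_second(CLOCK_HZ, 1))  # 3 billion per second
print(instructions_per_second(CLOCK_HZ, 4))  # 750 million per second
```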